Good News! You No Longer Need to Generate Documentation Manually

Up-to-date documentation is critically important. Most front-end developers don’t understand back-end development, and your API documentation gives them, and anyone else who plans to use your application, a critical source of information about how the back-end works.

But even though we all know this, most back-end developers fail to reliably produce documentation… and our own developers were no exception.

In this article, we’ll walk you through our own struggle to reliably produce API documentation, and how we developed a simple, step-by-step process that automatically generates our documentation—a process which you can deploy today.

Our Documentation Struggle Probably Sounds a Lot Like Yours

When we began to develop multiple products for our company — StartupCraft Inc. — we put a standard documentation process in place. Every time our back-end developers released a new feature, they had to manually update their documentation and notify our front-end engineers of the change.

But we were in constant crunch mode. Our API was in a constant state of flux. Our back-end was continuously being updated. And because our back-end engineers were overwhelmed with mission-critical, time-sensitive development, they often lacked the bandwidth to maintain their documentation.

The result: Our front-end developers always felt behind, and they began to contact our back-end engineers every single day just to get a brief on any undocumented changes.

We knew we couldn’t stay on this path. At StartupCraft Inc., we create clean, efficient, productive workflows, and our documentation process was messy, inefficient, and unproductive. Our environment became hard to work within, and it just didn’t align with our values. So we set out to create a pristine solution for our own internal operations—and to help community members, like you, who suffered similar challenges.

Our Technology Stack

Before we dive into our solution attempts, here’s our technology stack. We treated our SPAs (single-page applications) and our API as separate applications. We used React and Ember on the front-end and a Rails API on the back-end.

Our Realization: Manual Documentation Generation Will Always Fail

At first, we simply reversed our existing manual process: we asked our back-end developers to create each feature’s documentation before they actually implemented it.

This fix felt promising. We were using Apiary for our API documentation, letting our back-end engineers mock up documentation that our front-end engineers could use as their real API with minimal adjustment. After our back-end engineers finished the feature’s actual logic, all the front-end engineers had to do was change the endpoint from the mock-up to the real one.

But we were also following the JSON API specification, which made it hard to build out a feature’s full structure on paper. We tried to fix this by using a general format, but even that required substantial adjustments and led to numerous exchange problems.

Ultimately, we had to scrap this solution, and admit a hard truth — manual documentation will always be too complicated, and thus will always fail. From this realization, we decided to simplify the process by developing a fully automated documentation generation process.

How We Automated Our Documentation Generation

We began our research, and quickly uncovered a promising lead. We noticed that developers usually look at tests first, as tests produce the most relevant example for each specific function. What’s more, tests naturally connect to tons of other processes, and developers already have to run tests when building functions.

Realizing this, the solution became obvious—we just needed to find a way to automatically generate documentation out of the testing processes. This realization led us to find a great gem, the RSpec API Doc Generator.

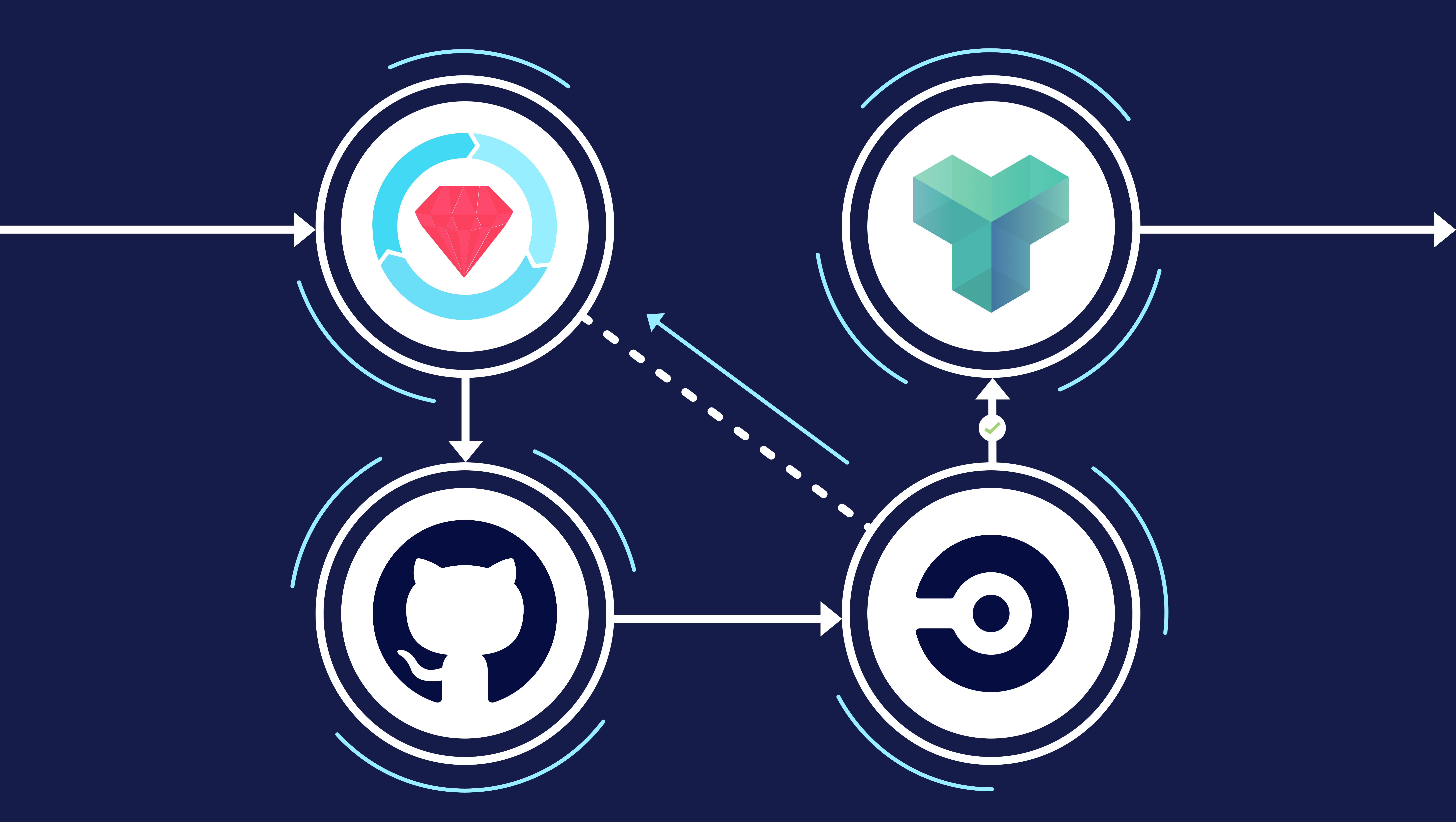
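
To make the idea concrete, here is a minimal plain-Ruby sketch of what “documentation from tests” means: each test records the request it made and the response it received, and a renderer turns those records into readable text. This is not how the gem is implemented. The `RecordedExample` struct and the `render_docs` helper are hypothetical names invented for this illustration, and the output is only loosely Markdown-shaped.

```ruby
# A hypothetical record of one request/response pair captured during a test.
RecordedExample = Struct.new(:description, :verb, :path, :status, :body, keyword_init: true)

# Render recorded examples as simple Markdown-like documentation,
# grouped by endpoint so that each route appears only once.
def render_docs(examples)
  examples.group_by { |e| "#{e.verb} #{e.path}" }.map do |endpoint, group|
    lines = ["## #{endpoint}", ""]
    group.each do |e|
      lines << "### #{e.description}"
      lines << "Response #{e.status}"
      lines << e.body unless e.body.to_s.empty?
      lines << ""
    end
    lines.join("\n")
  end.join("\n")
end

examples = [
  RecordedExample.new(description: "Responds with 200", verb: "GET", path: "/api/v1/user",
                      status: 200, body: '{"id": 1}'),
  RecordedExample.new(description: "Responds with 401", verb: "GET", path: "/api/v1/user",
                      status: 401, body: "")
]

puts render_docs(examples)
```

Because the records come from tests that actually ran, the rendered text cannot drift away from the real behavior of the API, which is the property we were looking for.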

Armed with this amazing library, we quickly set up a process that automatically generates documentation out of the testing suite in just four simple steps:

- Add the right gem to your Gemfile

- Initialize and configure the gem

- Generate documentation from tests

- Publish the documentation

These four steps immediately solved our documentation challenge, and we think it might solve yours too.

So let’s take an in-depth look at each step in our automated documentation process, and show you how to give this solution a try.

Setting Up Your Trial Project

Please note, for the sake of brevity, we will not be covering the underlying application architecture. But to test our process, just follow these steps:

- Create a new project from scratch, or simply utilize this sample project.

- Find the Apiary documentation for this sample project here.

- If you need to create a new API on Apiary, click here, sign up for a new account, and select the “Start Your API in API Blueprint” option.

- After you create your API, connect it to the GitHub Repo. In our example, enter {APIARY_API_NAME}/settings and hit “Commit and start Sync” to push the commit to your repo, and sync it with Apiary. (Later, you’ll have to remove this sync, to make sure Apiary doesn’t mirror all the commits back to master.)

1. Add the right gem to your Gemfile

Inside your project, open the Gemfile and add the “rspec_api_documentation” gem. (Make sure you also have ‘rspec-rails’ in the development group.) Please note that in our own projects we use the master branch from GitHub, because we found it has the most recent updates for API Blueprint generation. The example below pins a released version instead.

Gemfile

    group :development, :test do
      gem 'rspec-rails', '~> 3.5'
    end

    group :test do
      gem 'rspec_api_documentation', '~> 6.1.0'
    end

2. Initialize the gem

Here’s a configuration example from one of our projects running in production. For your own project, you’ll find the detailed documentation here:

spec/rails_helper.rb

    RspecApiDocumentation.configure do |config|
      config.api_name = 'generating-auto-documentation'
      config.format = :api_blueprint
      config.request_headers_to_include = ['Authorization', 'Content-Type']
      config.response_headers_to_include = ['Content-Type']
      config.request_body_formatter = :json
    end

3. Generate documentation from tests

At this point, you want to make sure your tests are actually generating the right documentation. Let’s use the endpoint that returns the current user as an example.

In this example, we created an endpoint at `/api/v1/user`. If the token in the request headers is valid, you should receive the user’s profile data: a 200 status code and a body that contains a valid JSON user structure. If the token is not valid, you should receive an error with status code 401 (Unauthorized).
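
As a rough illustration of that contract, here is a plain-Ruby sketch that is independent of Rails and of our authentication library. The `current_user_response` helper, the in-memory `USERS_BY_TOKEN` lookup, and the shape of the bodies are all hypothetical stand-ins; the real response follows the JSON API structure and the real token check is done by the authentication layer.

```ruby
require 'json'

# Hypothetical in-memory stand-in for token validation and user lookup.
USERS_BY_TOKEN = {
  'valid-token' => { id: 1, email: 'user@example.com' }
}.freeze

# Returns [status, body] for GET /api/v1/user given the request headers.
def current_user_response(headers)
  # Expect a header of the form "Bearer <token>"; anything else yields nil.
  token = headers['Authorization'].to_s[/\ABearer (.+)\z/, 1]
  user = USERS_BY_TOKEN[token]

  if user
    [200, JSON.generate(user)]
  else
    [401, JSON.generate({ error: 'Unauthorized' })]
  end
end

status, body = current_user_response({ 'Authorization' => 'Bearer valid-token' })
puts status
puts body

status, _body = current_user_response({})
puts status
```

The two calls at the end correspond to the two cases the tests need to cover: one request with a valid token and one without any token.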

Here’s the test example:

spec/acceptance/api/v1/user_spec.rb

    # frozen_string_literal: true

    require 'rails_helper'
    require 'rspec_api_documentation/dsl'

    RSpec.describe 'Users' do
      resource 'Current user endpoint' do
        route '/api/v1/user', 'Current user endpoint' do
          get 'Get current user' do
            # The :auth tag pulls in the shared context that sets the Authorization header.
            context 'when user is authenticated', :auth do
              example_request 'Responds with 200' do
                expect(status).to eq(200)
              end
            end

            # No :auth tag here, so the request is sent without a token.
            context 'when user is not authenticated' do
              example_request 'Responds with 401' do
                expect(status).to eq(401)
              end
            end
          end
        end
      end
    end

The two contexts in this test look nearly identical. Both call the same endpoint, once with a token and once without. The request without a token should receive a response with a 401 status code, and the request with a token should receive a response with a 200 status code and a body that contains a valid JSON user structure.

What differentiates the two contexts is the :auth param on the first one. It pulls in a shared context that authenticates the user with the Knock library, which checks the “Authorization” request header. The shared context is described by this next code snippet:

spec/support/shared_contexts/authenticated_user.rb

    # frozen_string_literal: true

    RSpec.shared_context 'with authenticated_user' do
      let(:authenticated_user) { create(:user) }

      before do
        token = Knock::AuthToken.new(payload: {sub: authenticated_user.id}).token
        header "Authorization", "Bearer #{token}"
      end
    end
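
The snippet above defines the shared context, but the `:auth` tag only has an effect once the two are connected. One common way to make that connection, assuming rspec-core 3.5 or later, is `config.include_context` in the RSpec configuration. Treat the following as a sketch of how the wiring could look rather than a copy of our project: the file placement and the `spec/support` glob are assumptions about the directory layout.

```ruby
# spec/rails_helper.rb (sketch; placement is an assumption)

# Load shared contexts and other support files first, so that the shared
# context is already registered when it is referenced below.
Dir[Rails.root.join('spec', 'support', '**', '*.rb')].sort.each { |file| require file }

RSpec.configure do |config|
  # Every example group tagged with :auth gets the shared context included,
  # so its `before` block sets the Authorization header for the request.
  config.include_context 'with authenticated_user', :auth
end
```

With this in place, adding or removing the `:auth` tag on a context is all it takes to switch between the authenticated and unauthenticated cases.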

4. Publish the documentation

You’re free to use any CI tool you’d like. We use CircleCI in our projects, as it offers sophisticated options that let us configure the whole documentation process. We will likely produce a separate article on how we like to configure CircleCI, but for now let’s just look at how we configure it for documentation generation.

Here’s how we configure our workflows to ensure test runs are mandatory, no matter what branch you’re on:

.circleci/config.yml

    workflows:
      version: 2
      build_and_generate_docs:
        jobs:
          - build
          - generate_docs:
              requires:
                - build
              filters:
                branches:
                  only: master

We use the master branch as our staging environment, so we configure CircleCI to start documentation generation as soon as a new PR gets merged into master. Here’s our job for generating the documentation:

.circleci/config.yml

    generate_docs:
      <<: *defaults
      steps:
        - checkout
        - *install_bundler
        - *restore_cache
        - *install_dependencies
        - *save_cache
        - *setup_database
        - run:
            name: install apiaryio gem
            command: gem install apiaryio
        - run:
            name: build documentation
            command: bundle exec rake docs:generate
        - run:
            name: publish documentation
            command: apiary publish --path="doc/api/index.apib" --api-name="generatingautodocumentation"

We extracted a few general steps into YAML references (the `*name` aliases above), as there are multiple general tasks sitting between running the tests and the documentation generation script. You can find the full code excerpt, with all of the steps described in full, here.
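
Before the publish step runs, it can help to fail fast when the generated file is missing or the Apiary credentials are not set. The following Ruby sketch shows one way to check for both. The `publish_problems` helper is hypothetical and not part of our pipeline, and it assumes the Apiary CLI reads its token from the `APIARY_API_KEY` environment variable.

```ruby
# Returns a list of human-readable problems that would make publishing fail.
# An empty list means the documentation looks ready to publish.
def publish_problems(path:, env: ENV)
  problems = []

  if !File.file?(path)
    problems << "#{path} does not exist; run the docs generation step first"
  elsif File.zero?(path)
    problems << "#{path} is empty; no examples were documented"
  end

  problems << 'APIARY_API_KEY is not set' if env['APIARY_API_KEY'].to_s.empty?
  problems
end

# Report every problem on stderr so it shows up in the CI log.
problems = publish_problems(path: 'doc/api/index.apib')
problems.each { |problem| warn problem }
```

In a CI step you would typically exit with a non-zero status when the list is not empty, so the job stops before `apiary publish` is called.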

Bring Automated Document Generation to Your Organization

The above process offers a truly elegant solution to the documentation problem. After new code enters the master branch, the process automatically runs the tests, generates the documentation, and publishes it.

Now, even though this process is elegant, we do admit that it’s not perfect. You will have to manage different parameters and filters, and you still need your engineers to follow the system (though we’ve found that engineers are happy to simply write these tests if it means they don’t have to write documentation).

But overall, we’ve found that this process makes it much, much easier to maintain your documentation. It saves your back-end engineers a lot of time, it greatly improves their communication with the front-end, and it lets everyone move past the daily documentation struggle and get back to focusing on the work they love.

So give this process a try, and let us know how it worked for you. And if you’ve already solved your documentation challenges, then feel free to let us know what new productivity challenge you are currently struggling with and would love us to solve.

Special thanks to Vadim Shalamov for describing and wrapping the example for a solution.

Shoot us a comment here, and happy coding!
